Structuring the Unstructured: Document Processing

Written for as a Tech Note for World Food Programme Analytics HubIntroduction

Most of the information available for us to dissect and interpret is in documents. Documents are a type of data which is called “unstructured data” or “dark data” This is because they cannot be processed in a structured way for they inherently lack a generalized structure and they have all kinds of elements: text, tables, all types of charts, citations, titles, and the list will go on. When you look at a document, what are the containers that have the information you are looking for? All things considered, let’s assume that the containers of information are:

Table of contents

Titles

Paragraphs

Tables

Charts

Now how can we extract these containers, store them and communicate them to users?

Object-detection and Image Processing

One thing you can do is treat a document like a zipped file containing images. Each image is just a number of objects organized in a certain way. Now, a whole new horizon is available for us, the horizon of object detection, and the objects we need to detect are our information containers that we specified. The task we have now is a vision task, and a classification task at the same time. The question to ask now is what tools do we have in hand to perform this task? We will obviously need vision models that can dissect images. There is a huge number of those available for public use, and you can find a lot of them here. Each of them uses researched deep learning methods like transformers, CNNs, and more. The challenge is that those object detectors are not exposed to documents similar to what we have at WFP. At the core, such models are expected to detect and classify. For them to be able to infer that some specific pixels are actually a table, they need to be exposed to a plethora of tables beforehand, which is what is called training or fine-tuning in some cases. This brings us to the question of what will they be trained on?

Getting Technical

Scraping the web, you can find datasets already labeled and ready to go but they will almost always not be specific enough for your documents. This is what I tried using Roboflow. Roboflow is a “computer vision developer framework,” where it makes image labeling and image data processing, model training and deployment easier. Roboflow was chosen here because it is a free tool which is designed specifically for the task we have here. It allows uploading images, annotating them, training a model on the platform or calling the data using API in any format for training locally on any python script. On Roboflow, I found this dataset which contains around 2700 images of document pages already labeled with 24 classes of document parts including: tables, charts, titles, subtitles, abstracts, charts, paragraphs, equations, and more. I trained DETR and Yolov8 and other object detection models on this dataset. However, Yolov8 showed the fastest and best results even when asked to detect on a batch of images. This is expected given that Yolo (You Only Look Once) models are the state-of-the-art models for object detection. For more information about the architecture and idea behind Yolo models take a look at this article. The fine-tuned models were now tested on WFP’s 2022 Annual Country Reports. Shown below are some of what Yolov8 could detect and couldn’t detect:

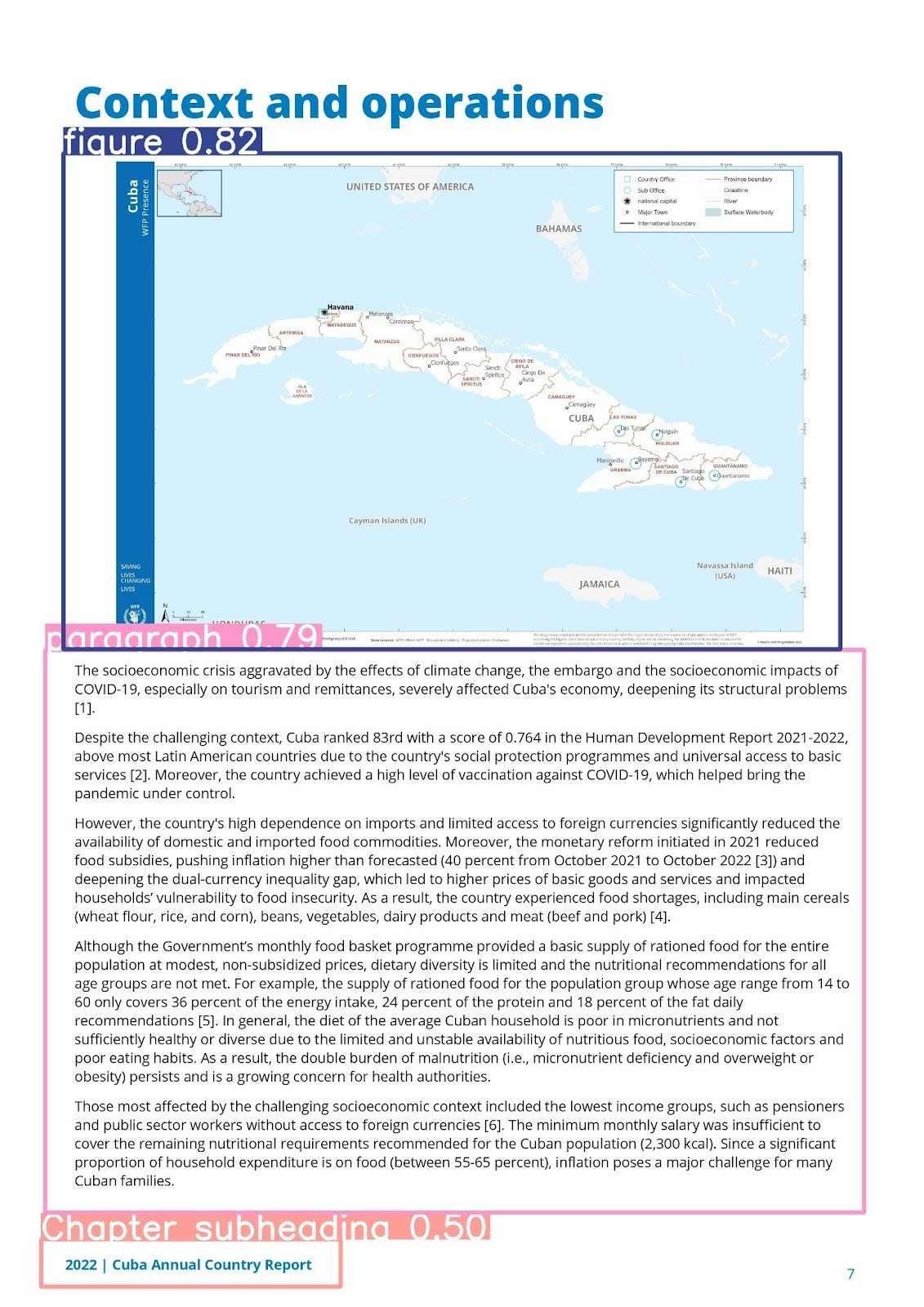

In the first image, a figure was detected with 0.82 confidence, a paragraph with 0.79, and a chapter subheading with 0.5. Now the title was not detected, and the chapter subheading is not really a chapter subheading which explains the low confidence. In the second image, a date is detected with 0.28 confidence which is a false detection. The figure caption detection is a correct detection but it has low confidence, and the table is misdetected as a paragraph with a low confidence of 0.39. As a result, there is still confusion, low confidence, and other problems in Yolov8’s classification. This is because the model was trained on a relatively small amount of scientific papers as in the dataset mentioned above, not our WFP documents with their specific layout and elements. This would mean that for best results WFP documents should be included in the training dataset, or we should have a bigger more comprehensive datasets so the model can generalize better in testing situations. Yet results are good, but must be better. For that end, we can train models to detect each of the objects discussed before: tables, graphs, and paragraphs. For tables, Microsoft’s TATR (Table Transformer) was used with impressive results yet still in-need of fine-tuning as shown below:

In the first image, all the tables were detected and the bounding boxes cover them fully. However, the second page contained overlapping detections and indicated a great deal of confusion given the peculiar layout of the tables in this document. Together, the detections still missed the firstmost row and the two lowermost rows. Yolov8 was trained on another dataset from roboflow containing charts, however still the dataset is small and the model should be exposed to the graphs in the ACRs to perform better. But the most suitable course of action is to train a yolo model on a large dataset containing a variety of document pages manually labeled.

Data to the rescue

The thing that will solve all of these problems is training the model with appropriate data. The appropriateness of the data can be achieved either by having:

WFP specific data

Large comprehensive dataset

WFP specific documents will lead to precise results because this whole task can be divided into pipelines of object detection models for each specific kind of documents like Annual Reports and others. The logistics of creating a new labeled dataset of images of pages of each document type is the biggest challenge here. It is very time consuming, and for this we need to explore a model-aided document labeling. The models which will be used for this are still vision models performing the task of labeling or classification. However, these models will have to be what is called “zero-shot” or “one-shot” object detectors like CLIP and OWL which classify and detect objects in an image without having seen them before. These will help in the quick labeling of your images. Roboflow allows this service, but this is yet to be explored.

The second and the better solution is to train a yolo model on any of the following datasets:

DocBank: DocBank is a large-scale document image dataset annotated with layout structures, including sections, titles, paragraphs, tables, figures, and equations. It contains over 500,000 document images from various sources, such as scientific papers, news articles, and business reports.

DocLayNet: DocLayNet provides page-by-page layout segmentation ground-truth using bounding-boxes for 11 distinct class labels on 80863 unique pages from 6 document categories. It provides several unique features compared to related work such as PubLayNet or DocBank:

PubLayNet: PubLayNet is a large-scale dataset for document layout analysis, containing over one million document pages from PubMed Central (PMC). It includes labeled annotations for document regions such as titles, abstracts, authors, affiliations, text bodies, figures, and tables.

GROTOAP2: GROTOAP2 is a dataset for table detection and recognition in documents. It contains labeled annotations for document pages, including tables, table structure, and cell content. The dataset includes various document types, such as scientific articles, reports, and forms.

USTB-DLSL: The USTB Document Layout Structure Library (USTB-DLSL) is a dataset containing document images with annotations for layout structures, including titles, headings, paragraphs, images, tables, and captions. It is primarily focused on Chinese document layout analysis.

TableBank: TableBank is a dataset for table detection and recognition in documents, containing over 417,000 labeled tables from various sources, including scientific papers, business reports, and patents.

Marmot: Marmot is a dataset specifically designed for chart and graph detection in scholarly articles. It includes annotations for chart bounding boxes and types (e.g., line chart, bar chart) in articles from the arXiv preprint repository. While not as large as other datasets, it provides detailed annotations tailored for chart detection tasks.

ChartSense: ChartSense is a dataset that focuses on chart detection and classification in scientific articles. It contains annotations for chart bounding boxes and chart types (e.g., line chart, scatter plot) in articles from arXiv and PubMed Central. While the dataset size may be modest, it offers detailed annotations suitable for chart detection research.

All of these datasets can take document processing to the next level when training yolo models on each specific task or on a generalized task of layout analysis through object detection. This will increase accuracy and improve results while minimizing information loss and avoiding problematic detections. This is better than having WFP specific data because annotating a large enough amount of document pages will be very time consuming and can be erroneous. The only drawback is that the training will be computationally expensive as with training any large state-of-the-art model. For now, there is a yolov8 model trained on a subset of the DocLayNet dataset available on Huggingface and will be used in the next step. The model can be found here. You can try out the capabilities of this model here.

Conclusion

All of what has been discussed is part of a twofold process. This twofold process has been referred to before as “information extraction and information communication.” Now that we have been able to delicately and precisely extract information containers from the depths of WFP’s unstructured data, we can feed them in a into a LLM like Llama, Gemini, GPT, and many more for processes like question answering, summarization, and hopefully more. This “feeding into LLM” can take two forms: fine-tuning, and contexts for generated answers. Both are viable options yielding good results in terms of information communication.